HA Private Registry with S3 and NFS

Statelessness is offered as the solution for everything nowadays: to scale, to containerize, to make services fault tolerant… But in reality not everything can be stateless. Even if you store the configuration inside the containers, you still need to keep static files somewhere, databases are stateful by definition, and not every tool you will use was designed to be stateless.

So sooner or later you also need solutions in place to run stateful applications. In this article I will describe two solutions that fulfill this requirement, NFS/EFS and Minio/S3, and use them to create a local Docker registry and a Jenkins instance running on a Swarm cluster.

The tools I chose let you build cloud infrastructure, which is the right way to go, without limiting where you can deploy it: the idea is to stay cloud-provider agnostic.

NFS and EFS

EFS is a fairly new storage service on AWS. It uses the standard NFSv4 protocol but adds automatic scaling and options to fine-tune IOPS and latency; another great feature is its multi-AZ availability. In this example I use an NFS server installed locally in our office lab. Whether you use a traditional NFS server or EFS is transparent to Docker Swarm: the mounts are configured the same way.

Let's revisit the example from my previous article, which creates a Swarm service for Jenkins:

```shell
$ docker service create --name jenkins-fg \
    -p 8082:8080 -p 50000:50000 \
    -e JENKINS_OPTS="--prefix=/jenkins" \
    --mount "type=bind,source=/mnt/swarm-nfs/jenkins-fg,target=/var/jenkins_home" \
    --reserve-memory 300m \
    jenkins
v6oq1shzs51fw6q8d2bstkd2f
```

What is special about this command is the --mount option instead of the more traditional -v volume flag, which docker service create does not support. You can read the official Docker documentation on how to configure mounts.
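To make the --mount syntax concrete, here is a minimal Python sketch that assembles the same command as an argument list, for example to run it with subprocess from a manager node. The helper names mount_spec and jenkins_service_argv are hypothetical; the flags are the ones used in the command above.

```python
from typing import List


def mount_spec(mount_type: str, source: str, target: str, readonly: bool = False) -> str:
    """Build a --mount value: comma-separated key=value pairs without spaces."""
    parts = [f"type={mount_type}", f"source={source}", f"target={target}"]
    if readonly:
        parts.append("readonly")  # a bare "readonly" key mounts the path read-only
    return ",".join(parts)


def jenkins_service_argv(nfs_root: str = "/mnt/swarm-nfs") -> List[str]:
    """Argument list equivalent to the docker service create command above."""
    return [
        "docker", "service", "create",
        "--name", "jenkins-fg",
        "-p", "8082:8080",
        "-p", "50000:50000",
        # No shell is involved, so the quotes from the original command are not needed.
        "-e", "JENKINS_OPTS=--prefix=/jenkins",
        "--mount", mount_spec("bind", f"{nfs_root}/jenkins-fg", "/var/jenkins_home"),
        "--reserve-memory", "300m",
        "jenkins",
    ]
```

Calling subprocess.run(jenkins_service_argv(), check=True) on a Swarm manager would create the same service. Passing a list instead of a string avoids shell quoting altogether.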

The same NFS share must be mounted at the same path (/mnt/swarm-nfs here) on every node of the Swarm. Because the service bind-mounts a host path, this is what allows the container to start on any node without losing data or configuration.
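A quick way to verify this on each node before scheduling services is to look for the share in /proc/mounts. The sketch below assumes Linux nodes and the /mnt/swarm-nfs mount point used in this article; nfs_mount_source is a hypothetical helper, not part of any Docker tooling.

```python
from typing import Optional

NFS_TYPES = {"nfs", "nfs4"}


def nfs_mount_source(mounts_text: str, mount_point: str) -> Optional[str]:
    """Return the NFS export mounted at mount_point, or None if it is not NFS.

    mounts_text uses the /proc/mounts format: device, mount point, filesystem
    type, options, dump and pass fields separated by whitespace.
    """
    found = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        device, point, fstype = fields[0], fields[1], fields[2]
        if point == mount_point:
            # A later mount on the same path hides the earlier one.
            found = device if fstype in NFS_TYPES else None
    return found
```

On a node you would call it with the contents of /proc/mounts, e.g. nfs_mount_source(open("/proc/mounts").read(), "/mnt/swarm-nfs"). A None result means the bind mounts on that node would not point at the shared storage. An EFS file system mounted with the NFS client also shows up with the nfs4 type.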

Minio.io and S3

Minio is a distributed object storage server built for cloud applications and DevOps. It lets us self-host an S3-compatible service, which we will use as the storage backend when installing the local Docker registry. This way the private registry keeps the Docker images persistently and, if necessary, we can even synchronize S3 buckets between on-premises infrastructure and the AWS cloud.

Installing Minio

To install Minio as a Swarm service we can write a Docker Compose file; this file also uses the NFS share as a mount.

The official Minio documentation for Docker Swarm deploys a distributed setup with 4 containers. For the sake of simplicity, I created a different docker-compose file with a single Minio container:

```yaml
version: '3'

services:
  minio1:
    image: minio/minio:RELEASE.2017-03-16T21-50-32Z
    volumes:
      - /mnt/swarm-nfs/minio:/export
    ports:
      - "9001:9000"
    environment:
      MINIO_ACCESS_KEY: accesskey-example
      MINIO_SECRET_KEY: key-example
    deploy:
      restart_policy:
        delay: 10s
        max_attempts: 10
        window: 60s
    command: server /export
```

This Compose file publishes port 9001 on the Swarm nodes and maps it to port 9000 inside the container, mounts a folder from the NFS share at /export inside the container, and runs the command server /export when the service starts, which launches the Minio server.
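The two sides of "9001:9000" matter as soon as other services need to talk to Minio. The following sketch spells out which port is used from where: clients outside the cluster use the published port on any node through the Swarm routing mesh, while containers attached to the same overlay network use the service name and the container's own port. The helper names are hypothetical, 192.0.2.10 is a documentation address standing in for a node IP, and only the simple PUBLISHED:TARGET short syntax is handled.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    published: int  # port opened on every Swarm node
    target: int     # port the process listens on inside the container


def parse_port(spec: str) -> PortMapping:
    """Parse the simple Compose short syntax "PUBLISHED:TARGET", e.g. "9001:9000"."""
    published, sep, target = spec.partition(":")
    if not sep or ":" in target:
        raise ValueError(f"only PUBLISHED:TARGET is handled here, got {spec!r}")
    return PortMapping(int(published), int(target))


def minio_url(mapping: PortMapping, node_ip: Optional[str] = None, service: str = "minio1") -> str:
    """URL for reaching Minio.

    With node_ip: from outside the cluster, any node IP plus the published port.
    Without node_ip: from a container on the same overlay network, the service
    name plus the target port.
    """
    if node_ip is not None:
        return f"http://{node_ip}:{mapping.published}"
    return f"http://{service}:{mapping.target}"
```

For this Compose file, parse_port("9001:9000") gives the browser URL http://192.0.2.10:9001 and the in-cluster URL http://minio1:9000.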

To start a service from a docker compose file we need to use the docker stack deploy command:

```shell
$ docker stack deploy --compose-file=docker-compose.yaml minio
```

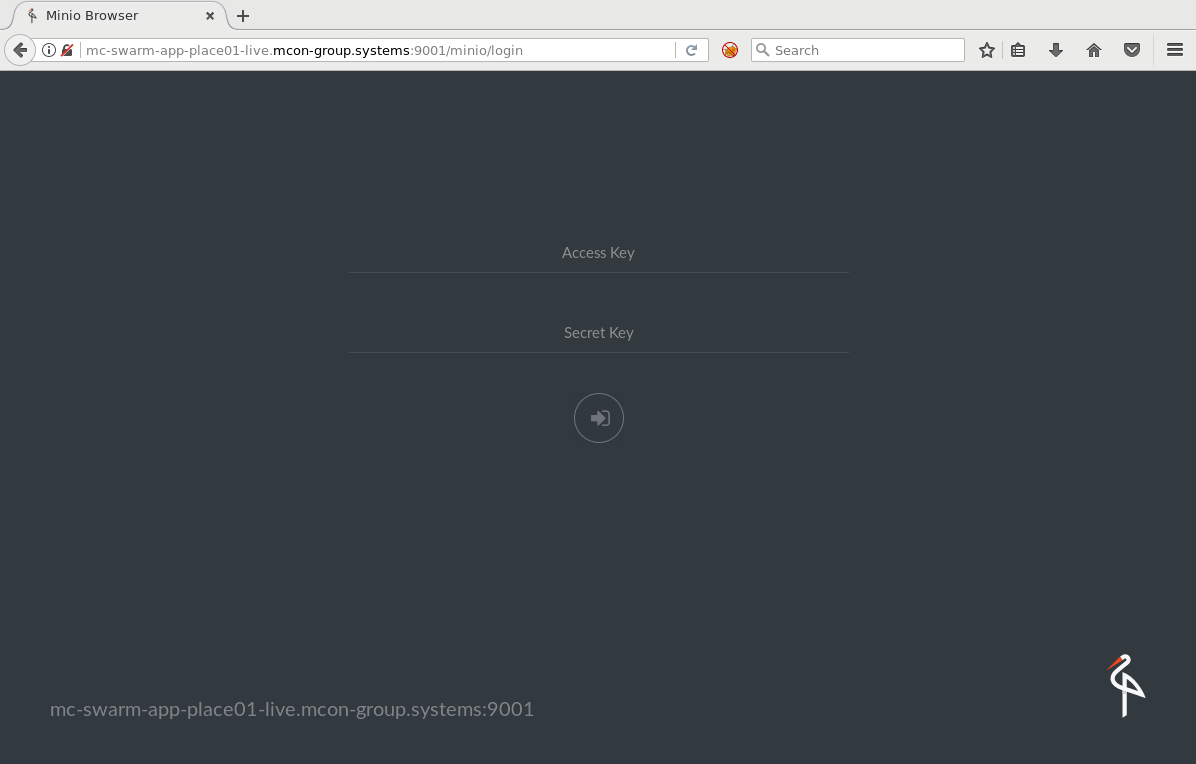

Thanks to the Swarm routing mesh, Minio is reachable on port 9001 at the IP address of any Swarm node.

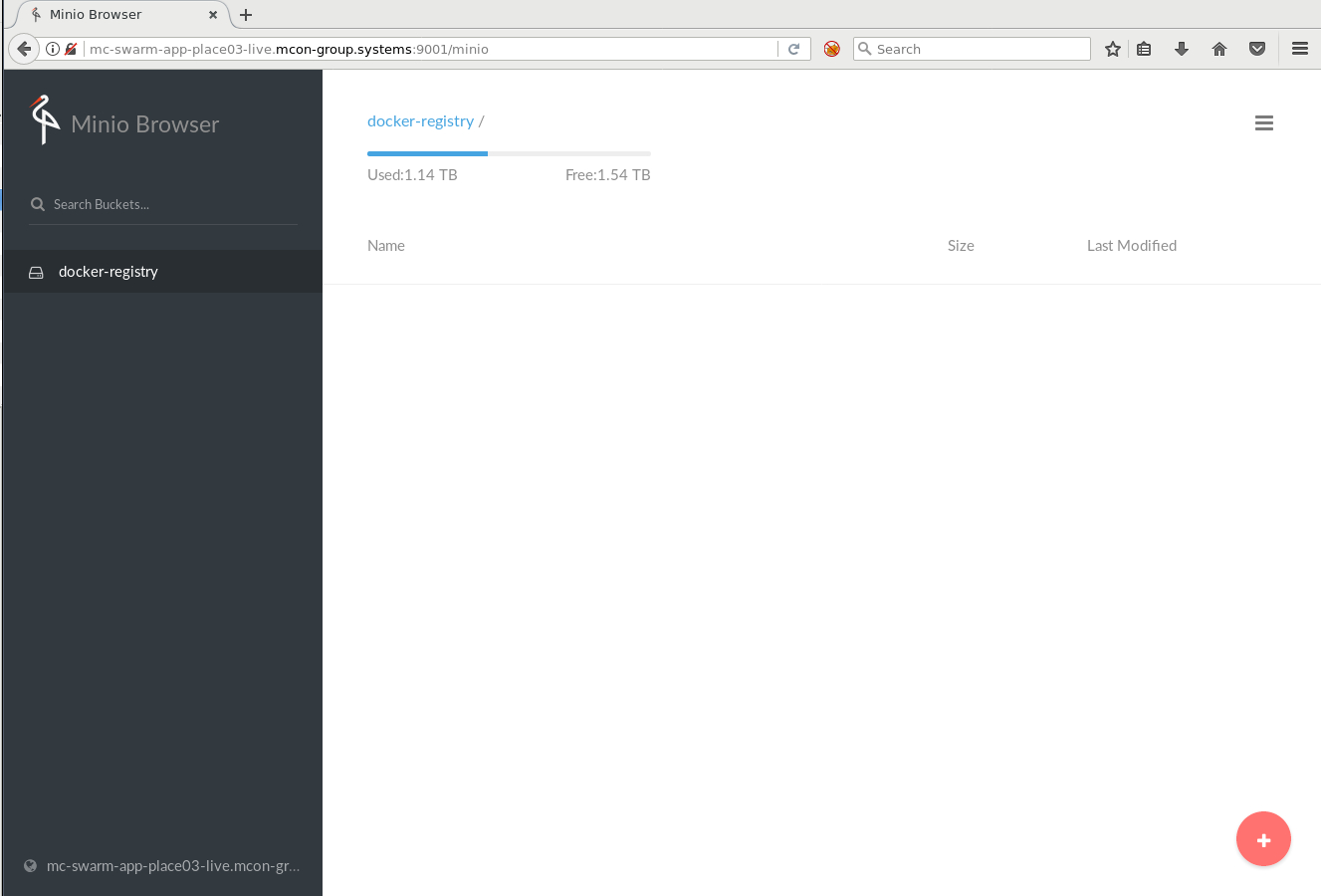

Then we create an S3 bucket, docker-registry, for our private Docker registry.
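Whichever way you create the bucket, its name has to follow the S3 bucket naming rules. Below is a minimal validator covering the main rules from the AWS S3 documentation: 3 to 63 characters; only lower-case letters, digits, dots and hyphens; starting and ending with a letter or digit; no adjacent dots; not formatted as an IP address. I am assuming Minio enforces equivalent restrictions, and newer AWS-only rules are not checked; is_valid_bucket_name is a hypothetical helper.

```python
import ipaddress
import re

# Lower-case letters, digits, dots and hyphens; first and last character must be
# a letter or digit; 3 to 63 characters in total.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    if not _BUCKET_RE.fullmatch(name):
        return False
    if ".." in name:
        return False  # adjacent periods are not allowed
    try:
        ipaddress.ip_address(name)  # names that look like an IP address are rejected
        return False
    except ValueError:
        return True
```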

Local Docker Registry using S3 and NFS

If your organization can't or doesn't want to use the public Docker Hub to store its images, you should run a private Docker registry.

To allow the local Docker registry to move between nodes of the Swarm while keeping access to the stored Docker images and to everything else the container needs to be recreated, we use both NFS (for the TLS certificates) and S3 (for the Docker images).

I created a docker-compose file that configures both for us:

```yaml
version: '3'

services:
  registry:
    image: registry:2
    volumes:
      - /mnt/swarm-nfs/registry/cert:/cert
    ports:
      - "5000:5000"
    environment:
      REGISTRY_STORAGE: s3
      REGISTRY_STORAGE_S3_ACCESSKEY: accesskey-example
      REGISTRY_STORAGE_S3_SECRETKEY: key-example
      REGISTRY_STORAGE_S3_BUCKET: docker-registry
      # Inside the overlay network Minio listens on its container port 9000;
      # 9001 is only the port published on the Swarm nodes. The name minio1
      # resolves only if the registry shares an overlay network with the Minio
      # service; otherwise use a node IP with port 9001.
      REGISTRY_STORAGE_S3_REGIONENDPOINT: http://minio1:9000
      REGISTRY_STORAGE_S3_REGION: us-east-1
      REGISTRY_LOG_LEVEL: debug
      REGISTRY_HTTP_TLS_CERTIFICATE: /cert/domain.crt
      REGISTRY_HTTP_TLS_KEY: /cert/domain.key
    deploy:
      restart_policy:
        delay: 10s
        max_attempts: 10
        window: 60s
```

This example mounts a folder from the NFS share that holds the TLS certificates and stores the Docker images in the S3 bucket we created with Minio. Deploy it as another stack:

```shell
$ docker stack deploy --compose-file=docker-compose.yaml registry
```
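The REGISTRY_* variable names in the Compose file are not arbitrary: the registry lets an option from its config.yml be overridden by an environment variable named REGISTRY_ followed by the option's path, with an underscore marking each indentation level, and by convention these names are written in upper case. The sketch below, using a hypothetical registry_env helper, derives a subset of those variables from the equivalent nested configuration. The storage driver itself is selected separately with REGISTRY_STORAGE=s3, so it is not part of the nested dictionary.

```python
from typing import Any, Dict


def registry_env(config: Dict[str, Any], prefix: str = "REGISTRY") -> Dict[str, str]:
    """Flatten nested registry options into REGISTRY_* environment variables.

    Every nesting level adds one upper-cased, underscore-separated component,
    so {"log": {"level": "debug"}} becomes {"REGISTRY_LOG_LEVEL": "debug"}.
    """
    env: Dict[str, str] = {}
    for key, value in config.items():
        name = f"{prefix}_{key.upper()}"
        if isinstance(value, dict):
            env.update(registry_env(value, name))  # descend one indentation level
        else:
            env[name] = str(value)
    return env


# A subset of the Compose settings, nested the way config.yml would hold them.
REGISTRY_CONFIG = {
    "storage": {
        "s3": {
            "accesskey": "accesskey-example",
            "secretkey": "key-example",
            "bucket": "docker-registry",
        }
    },
    "log": {"level": "debug"},
    "http": {"tls": {"certificate": "/cert/domain.crt", "key": "/cert/domain.key"}},
}
```

registry_env(REGISTRY_CONFIG) yields, among others, REGISTRY_STORAGE_S3_SECRETKEY and REGISTRY_HTTP_TLS_KEY, matching the names used in the Compose file.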

You can find more information about the registry and how to use it in the official documentation.
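As a small usage example, the sketch below builds the image reference you would pass to docker tag and docker push, and lists repositories through the /v2/_catalog endpoint of the Registry HTTP API V2. It assumes the registry is reachable as 192.0.2.10:5000 (a placeholder address) and that domain.crt is the certificate from the NFS cert folder, issued for the name or IP you connect to; the helper names are hypothetical.

```python
import json
import ssl
import urllib.request
from typing import List


def registry_ref(registry: str, repository: str, tag: str = "latest") -> str:
    """Image reference for docker tag / docker push, e.g. host:5000/repo:tag."""
    return f"{registry}/{repository}:{tag}"


def parse_catalog(body: bytes) -> List[str]:
    """Extract repository names from a GET /v2/_catalog response body."""
    return json.loads(body).get("repositories", [])


def list_repositories(registry: str, cafile: str) -> List[str]:
    """Query the catalog over HTTPS, trusting our own certificate file."""
    ctx = ssl.create_default_context(cafile=cafile)
    with urllib.request.urlopen(f"https://{registry}/v2/_catalog", context=ctx) as resp:
        return parse_catalog(resp.read())
```

For example, docker tag jenkins 192.0.2.10:5000/jenkins:latest followed by docker push 192.0.2.10:5000/jenkins:latest stores the image in the Minio bucket, after which list_repositories("192.0.2.10:5000", "domain.crt") should include "jenkins".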